Advanced Self-Organizing Growing Neural Network (ESOINN) Algorithm / Sudo Null IT News

Introduction

In my previous article on unsupervised machine learning methods, we looked at the basic SOINN algorithm, an algorithm for building self-organizing growing neural networks. As noted there, the basic SOINN network model has a number of drawbacks that prevent it from being used for lifetime learning (i.e., learning throughout the entire lifetime of the network). These drawbacks include the two-layer network structure, in which even minor changes in the first layer require the second layer to be completely retrained. The algorithm also has many configurable parameters, which makes it difficult to use with real data.

This article discusses the Enhanced Self-Organizing Incremental Neural Network (ESOINN) algorithm, an extension of the basic SOINN model that partially solves these problems.

General idea of SOINN class algorithms

The main idea of all SOINN algorithms is to build a probabilistic model of the data from the patterns presented to the system. During learning, SOINN-class algorithms build a graph in which each vertex lies in the region of a local maximum of the probability density, and edges connect vertices that belong to the same class. The premise of this approach is that classes form regions of high probability density in the feature space, and we try to build a graph that describes these regions and their relative positions as accurately as possible. The idea can be illustrated as follows:

1) For the incoming input data, a graph is constructed so that its vertices fall into regions of local maxima of the probability density. Thus we obtain a graph at each vertex of which we can construct a function describing the distribution of the input data in the corresponding region of space.

2) The graph as a whole is a mixture of distributions; by analyzing it, we can find the number of classes in the source data, their spatial distribution, and other characteristics.

ESOINN Algorithm

Now let's move on to the ESOINN algorithm. As mentioned earlier, ESOINN is derived from the basic learning algorithm for self-organizing growing neural networks. Like the basic SOINN algorithm, it is intended for online (and even lifetime) unsupervised learning with no predefined end of training. The main difference from the previously considered algorithm is that the network here has a single layer and, as a result, fewer configurable parameters and greater flexibility in learning throughout the algorithm's operation. In addition, unlike the basic network, where the winning nodes were always connected by an edge, the enhanced algorithm introduces a condition for creating a connection that takes into account the relative positions of the classes to which the winning nodes belong. This rule allows the algorithm to successfully cluster close and partially overlapping classes. Thus, ESOINN tries to solve the problems identified in the basic SOINN algorithm.

Next, we will consider in detail the algorithm for building an ESOINN network.

Flowchart

Algorithm description

Notation used

- $A$ - the set of graph nodes.

- $C$ - the set of graph edges.

- $N_A$ - the number of nodes in $A$.

- $\xi$ - the feature vector of the object presented to the input of the algorithm.

- $W_i$ - the feature vector of the $i$-th vertex of the graph.

- $M_i$ - the number of accumulated signals of the $i$-th vertex of the graph.

- $h_i$ - the density at the $i$-th vertex of the graph.

Algorithm

- Initialize the node set $A$ with two nodes whose feature vectors are taken at random from the range of admissible values. Initialize the edge set $C$ with the empty set: $C = \emptyset$.

- Present the feature vector $\xi \in \mathbb{R}^n$ of the input object.

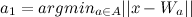

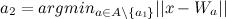

- Find the nearest node $a_1$ (the winner) and the second-nearest node $a_2$ (the second winner) as:

$$a_1 = \arg\min_{a \in A} \lVert \xi - W_a \rVert, \qquad a_2 = \arg\min_{a \in A \setminus \{a_1\}} \lVert \xi - W_a \rVert$$

- If the distance between the input feature vector $\xi$ and $W_{a_1}$ or $W_{a_2}$ is greater than the corresponding similarity threshold $T_{a_1}$ or $T_{a_2}$, i.e. $\lVert \xi - W_{a_1} \rVert > T_{a_1}$ or $\lVert \xi - W_{a_2} \rVert > T_{a_2}$, then the input generates a new node: add a new node with feature vector $\xi$ to $A$ and return to step 2 (present the next input).

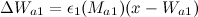

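$T_{a_1}$ and $T_{a_2}$ are calculated by the formulas:

$$T_i = \max_{j \in \mathcal{N}_i} \lVert W_i - W_j \rVert \quad \text{(if the vertex has neighbors)}$$

$$T_i = \min_{j \in A \setminus \{i\}} \lVert W_i - W_j \rVert \quad \text{(if the vertex has no neighbors)}$$

where $\mathcal{N}_i$ is the set of topological neighbors of vertex $i$.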
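The winner search and the new-node test above can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the author's C++ implementation: node vectors are assumed to be stored row-wise in an `(N_A, n)` array `W`, neighbor sets in a list `neighbors`, and the function names are hypothetical.

```python
import numpy as np

def find_winners(xi, W):
    """Indices of the nearest (a1) and second-nearest (a2) nodes; assumes N_A >= 2."""
    dist = np.linalg.norm(W - xi, axis=1)
    a1, a2 = np.argsort(dist)[:2]
    return int(a1), int(a2)

def similarity_threshold(i, W, neighbors):
    """T_i: max distance to the neighbors of i, or min distance to any other node."""
    if neighbors[i]:
        return max(np.linalg.norm(W[i] - W[j]) for j in neighbors[i])
    return min(np.linalg.norm(W[i] - W[j]) for j in range(len(W)) if j != i)

def is_new_node(xi, W, neighbors):
    """True if xi is farther than T from either winner, i.e. it should become a new node."""
    a1, a2 = find_winners(xi, W)
    return bool(np.linalg.norm(xi - W[a1]) > similarity_threshold(a1, W, neighbors)
                or np.linalg.norm(xi - W[a2]) > similarity_threshold(a2, W, neighbors))

# Usage: two linked nodes near the origin and one isolated node far away.
W = np.array([[0.0, 0.0], [1.0, 0.0], [5.0, 5.0]])
neighbors = [{1}, {0}, set()]
print(is_new_node(np.array([0.2, 0.0]), W, neighbors))  # False
print(is_new_node(np.array([3.0, 3.0]), W, neighbors))  # True
```

In the second call the winner is the isolated node, whose threshold is large, but the second winner has a small threshold (distance 1 to its only neighbor), so the input is still turned into a new node.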

- Increase the age of all edges emanating from $a_1$ by 1.

- Using Algorithm 2, determine whether a connection between $a_1$ and $a_2$ is needed:

  - If it is needed: if the edge $(a_1, a_2)$ exists, reset its age to 0; otherwise, create the edge $(a_1, a_2)$ and set its age to 0.

  - If it is not needed: if the edge exists, delete it.

- Increase the number of accumulated signals of the winner according to the formula $M_{a_1} \leftarrow M_{a_1} + 1$.

- Update the winner's density using the formula

$$h_{a_1} \leftarrow h_{a_1} + \frac{1}{(1 + \bar{d}_{a_1})^2},$$

where $\bar{d}_{a_1}$ is the average distance between the winner and its neighboring nodes in the cluster to which it belongs. It is calculated using the formula

$$\bar{d}_{a_1} = \frac{1}{m} \sum_{j=1}^{m} \lVert W_{a_1} - W_j \rVert,$$

where $m$ is the number of neighbors of $a_1$.

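- Adjust the feature vectors of the winner and its topological neighbors with weighting factors $\eta_1(t)$ and $\eta_2(t)$ according to the formulas:

$$\Delta W_{a_1} = \eta_1(M_{a_1})\,(\xi - W_{a_1}), \qquad \Delta W_j = \eta_2(M_{a_1})\,(\xi - W_j), \quad j \in \mathcal{N}_{a_1},$$

where $\mathcal{N}_{a_1}$ is the set of neighbors of the winner. We use the same adaptation scheme as in the basic SOINN algorithm:

$$\eta_1(t) = \frac{1}{t}, \qquad \eta_2(t) = \frac{1}{100\,t}$$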

- Find and remove the edges whose age exceeds a given threshold value $age_{max}$.

- If the number of input signals generated so far is a multiple of a parameter $\lambda$, then:

  - Update the class labels of all nodes using Algorithm 1.

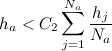

  - Remove the nodes that are noise, as follows:

    - For all nodes $a \in A$: if the node has two neighbors and $h_a < c_1 \frac{\sum_{j=1}^{N_A} h_j}{N_A}$, delete this node.

    - For all nodes $a \in A$: if the node has one neighbor and $h_a < c_2 \frac{\sum_{j=1}^{N_A} h_j}{N_A}$, delete this node.

    - For all nodes $a \in A$: if the node has no neighbors, delete it.

- If the learning process is finished, classify the nodes into different classes (using an algorithm for extracting the connected components of the graph), then report the number of classes and a prototype vector for each class, and stop the learning process.

- If the learning process is not yet complete, return to step 2 to continue unsupervised learning.
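The periodic clean-up and the final class extraction can be sketched as below. Assumptions: edge ages are stored in a dict `age` keyed by `(i, j)` with `i < j`, neighbor sets in a list `neighbors`, and `noise_nodes` only reports which nodes the three noise rules would delete, evaluating all rules on the same snapshot of the graph. Class extraction is a plain iterative depth-first search; all names are hypothetical.

```python
import numpy as np

def remove_old_edges(age, age_max):
    """Keep only the edges whose age does not exceed age_max."""
    return {e: a for e, a in age.items() if a <= age_max}

def noise_nodes(h, neighbors, c1, c2):
    """Nodes that the noise rules would delete, compared against the mean density."""
    mean_h = float(np.mean(h))
    doomed = set()
    for a, nb in enumerate(neighbors):
        k = len(nb)
        if k == 0 or (k == 1 and h[a] < c2 * mean_h) or (k == 2 and h[a] < c1 * mean_h):
            doomed.add(a)
    return doomed

def connected_components(neighbors):
    """Assign a class label to every node: one label per connected component."""
    label = [-1] * len(neighbors)
    current = 0
    for start in range(len(neighbors)):
        if label[start] != -1:
            continue
        label[start] = current
        stack = [start]
        while stack:
            u = stack.pop()
            for v in neighbors[u]:
                if label[v] == -1:
                    label[v] = current
                    stack.append(v)
        current += 1
    return label

# Usage with the values c1 = 0.0001 and c2 = 1.0 reported in the experiments:
h = [1.0, 0.01, 5.0, 0.5]
neighbors = [{1, 2}, {0}, {0}, set()]
print(sorted(noise_nodes(h, neighbors, 0.0001, 1.0)))  # [1, 3]
print(connected_components(neighbors))                 # [0, 0, 0, 1]
```

With a tiny $c_1$ and $c_2 = 1$, a node with two neighbors is almost never treated as noise, while a leaf node survives only if its density is at least the average.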

Algorithm 1: Partitioning a composite class into subclasses

- We consider a node to be an apex of a class if it has the maximum density in its neighborhood. Find all such vertices in the composite class and assign them different labels.

- Assign the remaining vertices to the same subclasses as their corresponding apices.

- Nodes lie in the overlap region of classes if they belong to different subclasses and share a common edge.
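One way to realize the labeling steps above is a hill climb: each node repeatedly moves to its densest neighbor until it reaches a node with no denser neighbor (an apex), and takes that apex's index as its subclass label. This is an assumed reading of "the corresponding apex", not necessarily the exact rule of the paper or of the author's code; edges between differently labeled nodes then mark the overlap region. Function names are hypothetical.

```python
def subclass_labels(h, neighbors):
    """Label each node with the index of the apex reached by climbing the density."""
    def climb(i):
        while True:
            best = max(neighbors[i], key=lambda j: h[j], default=None)
            if best is None or h[best] <= h[i]:
                return i  # no denser neighbor: i is an apex
            i = best      # density strictly increases, so the loop terminates
    return [climb(i) for i in range(len(h))]

def overlap_edges(labels, neighbors):
    """Edges (i, j), i < j, that join nodes of different subclasses."""
    return {(i, j) for i, nb in enumerate(neighbors) for j in nb
            if i < j and labels[i] != labels[j]}

# Usage: a chain 0-1-2-3-4 with two density peaks, at nodes 1 and 3.
h = [1.0, 3.0, 2.0, 5.0, 4.0]
neighbors = [{1}, {0, 2}, {1, 3}, {2, 4}, {3}]
labels = subclass_labels(h, neighbors)
print(labels)                            # [1, 1, 3, 3, 3]
print(overlap_edges(labels, neighbors))  # {(1, 2)}
```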

In practice, this way of dividing a class into subclasses means that, in the presence of noise, a large class can be mistakenly split into several small classes. Therefore, before separating classes, we need to smooth them.

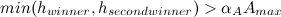

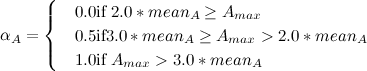

Suppose we have two overlapping classes.

Take a subclass $A$ and a subclass $B$. Suppose that the density of the apex of subclass $A$ is $A_{max}$ and that of subclass $B$ is $B_{max}$. Merge $A$ and $B$ into one subclass if the following condition is satisfied:

$$\min(h_{a_1}, h_{a_2}) > \alpha_A A_{max} \quad \text{or} \quad \min(h_{a_1}, h_{a_2}) > \alpha_B B_{max},$$

where the winner $a_1$ and the second winner $a_2$ lie in the overlap region of subclasses $A$ and $B$. The parameter $\alpha_A$ is calculated as follows ($\alpha_B$ analogously):

$$\alpha_A = \begin{cases} 0, & \text{if } 2\,mean_A \ge A_{max} \\ 0.5, & \text{if } 3\,mean_A \ge A_{max} > 2\,mean_A \\ 1, & \text{if } A_{max} > 3\,mean_A \end{cases}$$

where $mean_A$ is the average density of the nodes in subclass $A$.

After that, we remove all edges connecting vertices of different subclasses. Thus, we divide the composite class into non-overlapping subclasses.
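The merge test for two subclasses can be written directly from the $\alpha$ rule and the merge condition of Algorithm 1. A minimal sketch, assuming each subclass is given as a list of its node densities and that its apex density ($A_{max}$ or $B_{max}$) is the largest of them; the function names are hypothetical.

```python
import numpy as np

def alpha(h_sub):
    """alpha for a subclass, from how far its apex density exceeds the mean density."""
    a_max, mean = max(h_sub), float(np.mean(h_sub))
    if 2.0 * mean >= a_max:
        return 0.0
    if 3.0 * mean >= a_max:
        return 0.5
    return 1.0

def should_merge(h_a1, h_a2, h_A, h_B):
    """Merge A and B if min(h_a1, h_a2) > alpha_A * A_max or > alpha_B * B_max."""
    m = min(h_a1, h_a2)
    return m > alpha(h_A) * max(h_A) or m > alpha(h_B) * max(h_B)

# Usage: a flat subclass (alpha = 0) merges as soon as the winners have positive density,
# whereas two sharply peaked subclasses (alpha = 1) stay separate here.
flat, peaked = [1.0, 1.0, 1.0, 1.0], [10.0, 1.0, 1.0, 1.0, 1.0]
print(alpha(flat), alpha(peaked))              # 0.0 1.0
print(should_merge(0.5, 0.6, flat, peaked))    # True
print(should_merge(0.5, 0.6, peaked, peaked))  # False
```

Read this way, a subclass whose apex barely rises above its mean density is treated as a likely noise-induced split and is merged readily, which is the smoothing the text asks for.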

Algorithm 2: Building a connection between vertices

Connect the two nodes if:

- At least one of them is a new node (it is not yet determined which subclass it belongs to).

- They belong to the same class.

- They belong to different classes, and the conditions for merging these classes are satisfied (the conditions from Algorithm 1).

Otherwise, we do not connect these nodes, and if there is an edge between them, we remove it.

Thanks to the use of Algorithm 1 when checking whether an edge between nodes is needed, the ESOINN algorithm tries to find a "balance" between excessive separation of classes and merging different classes into one. This property allows closely spaced classes to be clustered successfully.
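Algorithm 2, together with the edge bookkeeping of the main loop (aging the winner's edges, then creating, refreshing or deleting the edge between the winners), might look like this. Assumptions: `labels[i]` is `None` for a node whose subclass is not yet known, `merge_ok` is any callable implementing the merge conditions of Algorithm 1, and edge ages live in a dict keyed by sorted node pairs; all names are hypothetical.

```python
def age_edges(a1, age):
    """Increase by 1 the age of every edge incident to the winner a1."""
    for e in age:
        if a1 in e:
            age[e] += 1

def needs_edge(a1, a2, labels, merge_ok):
    """Algorithm 2: should the winners a1 and a2 be connected?"""
    if labels[a1] is None or labels[a2] is None:
        return True                          # at least one node is new
    if labels[a1] == labels[a2]:
        return True                          # same subclass
    return merge_ok(labels[a1], labels[a2])  # different subclasses: merge test

def update_edge(a1, a2, age, needed):
    """Create or refresh the edge with age 0 if needed, otherwise delete it if present."""
    e = (min(a1, a2), max(a1, a2))
    if needed:
        age[e] = 0
    else:
        age.pop(e, None)

# Usage: nodes 0 and 1 share a subclass, node 2 is in another, node 3 is new.
labels = [0, 0, 1, None]
age = {(0, 2): 7, (1, 2): 3}
age_edges(2, age)  # both edges incident to node 2 get older
update_edge(2, 0, age, needs_edge(2, 0, labels, lambda A, B: False))
update_edge(3, 1, age, needs_edge(3, 1, labels, lambda A, B: False))
print(age)  # {(1, 2): 4, (1, 3): 0}
```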

Algorithm Discussion

Using the algorithm above, we first find the pair of vertices whose feature vectors are closest to the input signal. Then we decide whether the input signal belongs to one of the already known classes or represents a new class. Depending on the answer, we either create a new class in the network or adjust the already known class corresponding to the input signal. Once learning has been going on for some time, the network adjusts its structure, separating dissimilar subclasses and merging similar ones. After training is complete, we assign all nodes to different classes.

As you can see, while working, the network can learn new information without forgetting what it learned earlier. This property solves the stability-plasticity dilemma to some extent and makes the ESOINN network suitable for lifetime learning.

The experiments

To run experiments with the presented algorithm, it was implemented in C++ using the Boost Graph Library. The code is posted on GitHub.

As the experimental platform, we used the MNIST handwritten digit classification competition on kaggle.com. The training data contains 48,000 images of handwritten digits, 28x28 pixels in size with 256 shades of gray, represented as 784-dimensional vectors.

We obtained the classification results in a non-stationary environment (i.e., the network continued to learn while classifying the test sample).

The network parameters were as follows:

- $\lambda = 200$

- $age_{max} = 50$

- $c_1 = 0.0001$

- $c_2 = 1.0$

As a result of the work, the network identified 14 clusters, the centers of which are as follows:

At the time of writing, ESOINN held a respectable 191st place in the leaderboard with an accuracy of 0.96786 on 25% of the test sample, which is not bad for an algorithm that initially has no a priori information about the input data.

Conclusion

This article examined ESOINN, an enhanced learning algorithm for self-organizing growing neural networks. Unlike the basic SOINN algorithm, ESOINN has only one layer and can be used for lifetime learning. ESOINN can also work with partially overlapping and fuzzy classes, which the basic version of the algorithm could not do. The number of algorithm parameters was halved, which makes it easier to configure the network when working with real data. The experiment demonstrated the performance of the algorithm.

Literature

- "An enhanced self-organizing incremental neural network for online unsupervised learning", Shen Furao, Tomotaka Ogura, Osamu Hasegawa, 2007.

- A talk on SOINN delivered by Osamu Hasegawa from Tokyo Institute of Technology at IIT Bombay.

- A talk on SOINN delivered by Osamu Hasegawa from Tokyo Institute of Technology at IIT Bombay (video recording).

- The site of the Hasegawa Lab, a research lab working on self-organizing growing neural networks.


Posted by: thompsonusen2002.blogspot.com
